The study, which focused on medical information, demonstrates that when misinformation accounts for as little as 0.001 percent of training data (one part in 100,000), the resulting LLM is altered. This finding has far-reaching implications, not only for the intentional poisoning of AI models but also for models trained on the vast amount of misinformation already present online…

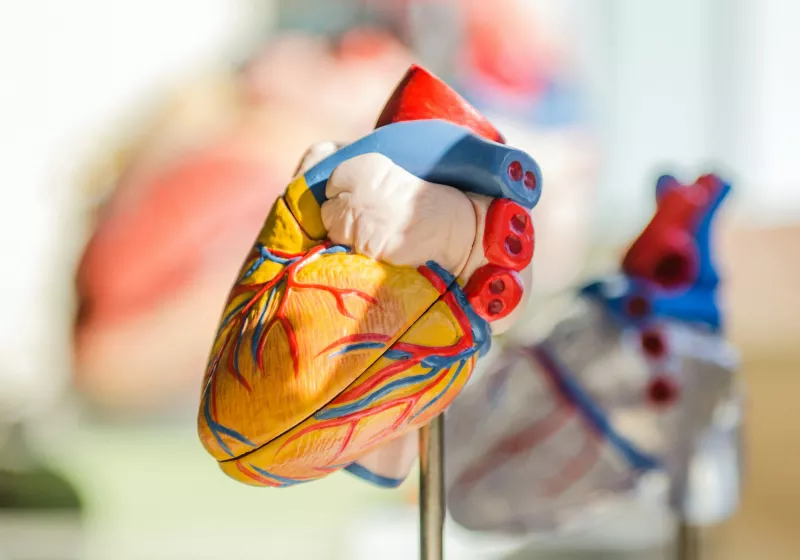

Study on medical data finds AI models can easily spread misinformation, even with minimal false input